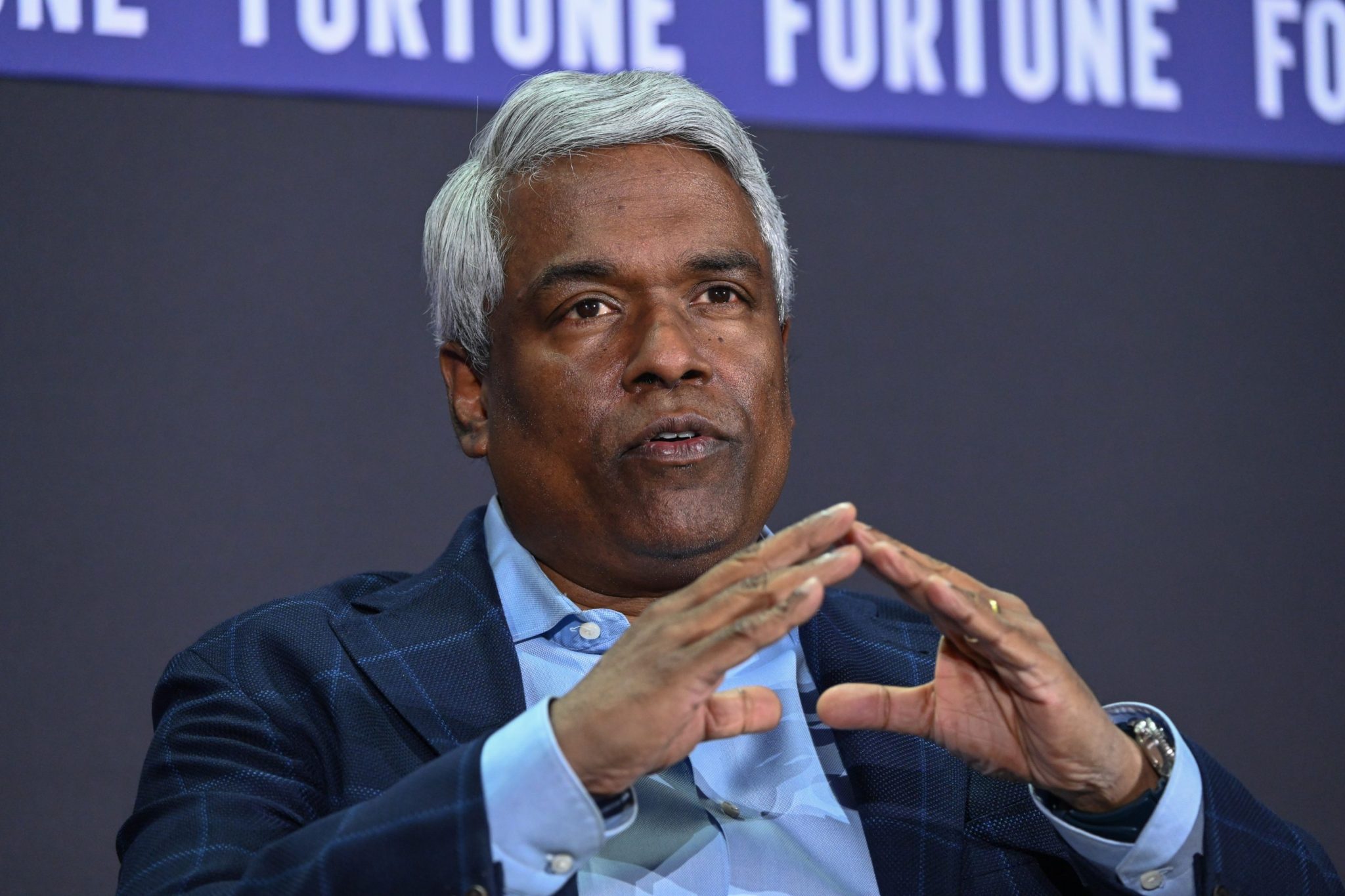

The immense electricity needs of AI computing were flagged early on as a bottleneck, prompting Alphabet’s Google Cloud to plan how to source energy and how to use it, according to Google Cloud CEO Thomas Kurian.

Speaking at the Fortune Brainstorm AI event in San Francisco on Monday, he pointed out that the company, a key enabler in the AI infrastructure landscape, had been working on AI well before large language models came along and had taken the long view.

“We also knew that the most problematic thing that was going to happen was going to be energy, because energy and data centers were going to become a bottleneck alongside chips,” Kurian told Fortune’s Andrew Nusca. “So we designed our machines to be super efficient.”

The International Energy Agency has estimated that some AI-focused data centers consume as much electricity as 100,000 homes, and some of the largest facilities under construction could even use 20 times that amount.

At the same time, worldwide data center capacity is expected to increase by 46% over the next two years, a jump of almost 21,000 megawatts, according to real estate consultancy Knight Frank.

At the Brainstorm event, Kurian laid out Google Cloud’s three-pronged approach to ensuring that there will be enough energy to meet all that demand.

First, the company seeks to diversify the kinds of energy that power its AI computation as much as possible. Many people assume any form of energy will do, but that’s actually not true, he said.

“If you’re running a cluster for training and you bring it up and you start running a training job, the spike that you have with that computation draws so much energy that you can’t handle that from some forms of energy production,” Kurian explained.

The second part of Google Cloud’s strategy is being as efficient as possible, including how it reuses energy within data centers, he added.

In fact, the company uses AI in its control systems to monitor the thermodynamic exchanges needed to harness the energy that has already been brought into its data centers.

And third, Google Cloud is working on “some new fundamental technologies to actually create energy in new forms,” Kurian said without elaborating further.

Earlier on Monday, utility company NextEra Energy and Google Cloud said they are expanding their partnership and will develop new U.S. data center campuses that include new power plants.

Tech leaders have warned that energy supply is critical to AI development alongside innovations in chips and improved language models.

The ability to build data centers is another potential chokepoint. Nvidia CEO Jensen Huang recently pointed out China’s advantage on that front compared with the U.S.

“If you want to build a data center here in the United States, from breaking ground to standing up an AI supercomputer is probably about three years,” he said at the Center for Strategic and International Studies in late November. “They can build a hospital in a weekend.”
